The victory last month of AlphaGo, Google’s program for playing the ancient game of Go, against world champion Lee Sedol, was revolutionary precisely because it replaced human intuition with a method of self-learning that surpassed human intelligence.

Image Credit: A screenshot from a Youtube clip Nature Video

On March 15, in a nondescript room in the Four Seasons Hotel in Seoul, the human race was handed a stinging defeat. The Go tournament between world grandmaster Lee Sedol, a South Korean, and AlphaGo, Google’s computer Go program, ended with a 4-1 victory for the machine. Lee apologized to the viewers, to the Korean people, and maybe to all of humanity. “I’m the one who lost here, not the whole human race,” he said at a press conference at the close of the tournament. But the shock and sadness that filled the room told the real story: the abilities exhibited by the computer during the tournament were set to redefine the balance of powers between human and artificial intelligence. The ramifications of this victory will affect each and every one of us.

In each such competitions, there is always a defining moment. In the contest between Lee and AlphaGo, that moment came during the second of five matches, on the 37th move. The day before, after a very evenly balanced duel, AlphaGo had won match No. 1. Now, in the sterile setting of the hotel room in Seoul, after long minutes of charged, buzzing silence, AlphaGo announced a move, and the human player moving the pieces at the computer’s orders placed a black stone in the largely empty area on the right side of the board, on point 4L.

The tournament was broadcast live on YouTube: On the right side of the screen was the tournament itself; on the left, the two commentators, who were standing up. They appeared to be in shock. One of them took a step back in surprise. They kept looking over at the screen where the game was being shown. One placed a black stone on the board, moved it, moved it again, before finally setting it on point 4L and saying: “That has to be a mistake.”

And then, on the right side of the picture, the camera focused on the seat of Lee Sedol, the second-highest ranking Go player in the world, ranked 9-dan, the highest level. The chair, however, was empty. The seconds continued to tick off on the white clock in the corner of the screen, but it would take Lee 15 entire minutes to recover from AlphaGo’s move and return to the game, only to lose to the machine 174 moves later. Move 37 was a “divine move” in Go terminology, the definitive moment when the computer’s path to victory was paved, in what was considered the hardest game for a machine to win.

Twenty years ago, in a hotel room in Chicago, chess grandmaster Garry Kasparov, the preeminent player of his generation, sat down to play against IBM’s Deep Blue computer, in the first match of its kind. Kasparov emerged from the competition with a 4-2 victory.

A year later, in May 1997, the two faced off again for a rematch, proposed by Kasparov. Kasparov handily won the first game, but from the start of game 2, Deep Blue was dominant. Then, on the 37th move, the computer moved its rook from C2 to E4 in a totally startling move. Ten moves later, Kasparov was done for. Over the four next games, Kasparov gradually lost his cool. Three games ended in a draw, before the human lost again in game 6 – thereby losing the match. The pictures of him clutching his head in his hands, pushing his chair back and leaving the room looking utterly stunned became iconic images in the great saga of man versus machine.

Garry Kasparov contemplates a move against Deep Blue, IBM’s chess playing computer, during the second game of their six game rematch, May 4, 1997, in New York.

Garry Kasparov contemplates a move against Deep Blue, IBM’s chess playing computer, during the second game of their six game rematch, May 4, 1997, in New York. AP

But it’s only by lifting the lid on these machines that have defeated these acclaimed paragons of human intelligence that one can really appreciate the quantum leap that artificial intelligence has made in the 20-year blink of an eye.

Simple – but not

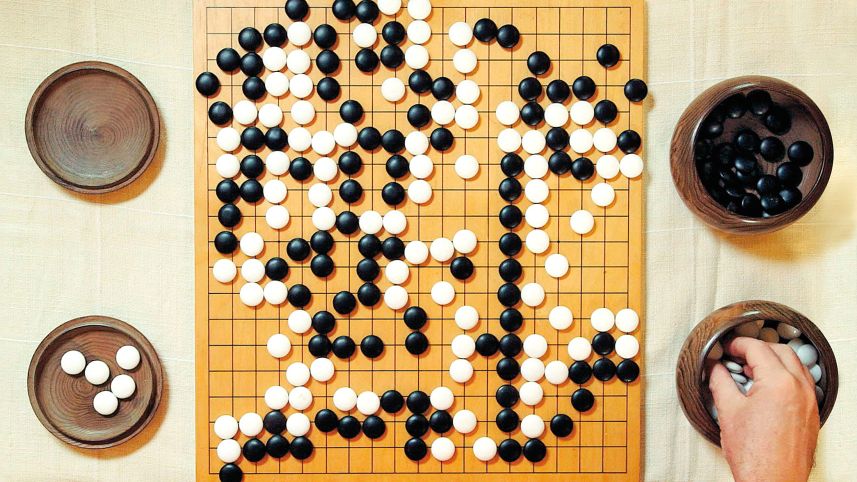

Go is a 3,000-year-old Chinese game that is popular mainly in East Asia. It is played on a 19-by-19 square grid, on which one player moves round black stones and the other uses white ones. All the pieces are otherwise identical and of equal value, and once placed cannot be moved. The object is to seize as much territory on the board as possible by strategically arranging the pieces and capturing the opponent’s pieces by surrounding them within these areas. At the end of the game, the winner is the one whose pieces hold the most intersections, or points, on the board.

Go is seemingly a very simple game with a minimal set of rules. But it’s precisely that great degree of freedom that makes it complex, abstract and extremely difficult to predict. There are 20 possible opening moves in chess. In Go, there are 361. From the first move onward, the possible sequences keep growing exponentially.

When the numbers are so big – in chess, after the third move, there are already more than nine million possible plays – these differences may not seem that consequential: The number of possible moves in each game is larger than the number of particles of matter in the universe, and computing power in its present form cannot solve them completely.

But the quantitative difference between the two games becomes a qualitative difference. By the standard calculations, the number of possible chess solutions is 10 to the 50th power, while for Go it is 2 x 10 to the 170th power. This difference doesn’t just make Go bigger and more complex than chess, it turns into a whole other thing entirely.

The breadth and depth of the game-playing possibilities in Go make the calculating component – the attempt to try to predict how the game will unfold – impractical. So Go players need to rely on other principles to guide them in finding the best move to make each time. These principles are based upon feeling. Since in Go one can’t really talk about strategy that the player can plan and implement, in order to win, the world’s best Go players use the cognitive element known as intuition.

Computers can make millions of calculations per second without a single mistake, but they lack intuition. And this is the reason why, before the contest began, Lee Sedol was so confident that he would prevail.

Deep Blue was a monster of brute force computing power, capable of evaluating 200 million moves per second. It was a supercomputer – one of several hundred in the world at the time, a research project that began at Carnegie Mellon University and was acquired by IBM in 1989. And it was programmed from the start for one purpose only: to beat world grandmaster Garry Kasparov at chess.

In the 1950s, when the number of possible moves in that game was first estimated, it was essentially proved that chess could not be solved by a “brute force” calculation alone, and that the search had to be narrowed to the most relevant moves. So the Deep Blue developers distilled information from hundreds of thousands of games of the top players into guidelines and chess heuristics, which Deep Blue then used to allocate its resources. It knew the best players’ games, and it had phenomenal computing power.

AlphaGo is digital-age technology. It lives in the “cloud,” which gives it tremendous calculating capacity via an Internet connection. It has no unusual hardware, and was not written for the express purpose of winning at Go. But its general algorithm enables it to learn the game in a very similar way to how people learn it.

AlphaGo’s main algorithm is constructed of two neural networks whose job is to scan the board and assess the outcomes of various possible moves. The “policy network” enables AlphaGo to calculate more moves ahead than any other algorithm. The “value network” enables it to ascribe higher values to moves with greater potential. The structure and programming of these two networks imitates the structure of the neural networks of the brain, with the specific bonds between them being strengthened or weakened in accordance with the reinforcement received as a result of the learning process. This is known as the principle of reinforcement learning.

AlphaGo was programmed to perform supervised machine learning. Its “training set” consisted of data from thousands of professional Go games. But unlike Deep Blue, which had a set of rules programmed into it from the start that became its sole operating manual, AlphaGo used the games shown to it by its human programmers only as a jumping-off point for a deeper study of the game, learning that it performed all on its own, in a completely unprecedented manner. Due to Go’s endless complexity, there is no practical method to distill the game into a set of rules and strategies. The only way to give the computer the ability to play Go at an advanced level is to get it to develop these strategies on its own.

Which is just what AlphaGo did. For a year it played simultaneously against 50 changing derivatives of itself – millions upon millions of games, much more than any human being had ever played – and after each win or loss, it updated its neural networks. A year of such training was enough to enable it to beat the world’s best human player, who was relying on 3,000 years of the game’s recorded history and 20 years of personal experience.

While human beings are constrained by the limits of the human body, the human brain and human consciousness – a limited calculating capacity, cognitive heuristics, ingrained ways of thinking, previous experience, expectations, feelings, fatigue, tension, anxiety – AlphaGo is up against no such thing. It can navigate all of the game’s possibilities with total freedom. Its distilled algorithms enable it to scan all the games’ moves and strategies without being affected by human experience or expectations. It can freely move beyond all the possibilities that we have deemed relevant. It is liberated from any human restrictions on thought and imagination.

Deep Blue was deliberately programmed to win at chess. It did so because it played in exactly the way that people taught it to play, taking advantage of its tremendous computing power. AlphaGo was programmed to be a learning machine. It won at Go – the holy grail of complete information problems – because it taught itself to play the game.

Era of the learning machine

Artificial intelligence is the general name for a number of computer science disciplines that aim to enable computers to behave in manners that mimic human intelligence. A slightly more cynical description would be to say that artificial intelligence is the group of problems in computer science for which there are presently no good solutions: As soon as the computer achieves a certain benchmark in a subcategory of artificial intelligence, it is subsumed within existing technologies and loses its original label.

A cat or a bowl of ice cream?

A cat or a bowl of ice cream? The Google algorithm knows. Social media

The Google search engine, the Facebook feed, the bots Siri (Apple) and Alexa (Amazon), as well as the robo-vacuum that cleans your house for you – all are based upon algorithms that were originally developed as part of artificial intelligence research. Practically every advanced digital application now makes use of technologies that emerged from this “ghetto”: pattern recognition, picture processing, natural language processing, handwriting identification, robotics and neural networks.

Machine learning algorithms are now standard digital practice, especially with those who process big data. The victory by AlphaGo’s algorithm was so dramatic, however, that it unmistakably marks this as the age of the learning machine. This is a whole new era, for computers can now acquire abilities that cannot be defined by a finite set of rules. While many of these capabilities – speech, facial identification, driving, folding laundry – might be considered mundane where humans are concerned, they are incredibly complex for computers.

Deep Mind, the developer of AlphaGo, was bought by Google two years ago for $500 million. AlphaGo is basically the company’s experimental platform. Deep Mind’s mission was to develop a “comprehensive artificial intelligence system” – i.e., a multipurpose learning computer. The Master Algorithm. It may take decades to fully achieve this vision, but we will see its initial successes much sooner. The better that computers get at solving imperfect information problems, the more of an impact machine learning will have. Some forecasts say that nearly 200 million jobs could be lost to computers in the next couple of decades.

After the triumph over Kasparov, IBM dismantled Deep Blue and it never played again. Kasparov never really recovered from the loss. For years, he claimed that IBM played dirty, that there was a flesh-and-blood player working with Deep Blue behind the scenes, coming up with the brilliant moves that ultimately led to its win. Following the stinging defeat, Kasaparov and other chess experts claimed that Deep Blue “didn’t play like a computer, it played like a human. This wasn’t computer chess.”

Last month in Seoul, when AlphaGo played its mind-blowing 37th move, it was hard to tell whether it had made a fateful mistake or a move so brilliant and unexpected that no one in the history of the human game had ever conceived of it. But another Go champion who was watching the match along with millions of other viewers, instantly grasped the momentousness of the occasion: “It’s so beautiful, so beautiful,” he said, during the televised coverage. “No human would ever make such a move. It’s a move that only a computer could come up with.”

Twenty years ago, Deep Blue’s winning move was so surprising because it resembled a human move. AlphaGo’s winning move was so revolutionary, however, precisely because it was superhuman: The games that the algorithm played against itself created new moves of which the computer was able to avail itself in order to win. By definition, these were not human moves.

Lee Sedol was right, apparently, in believing that AlphaGo does not possess intuition in the human sense. But it didn’t need it: Its very lack of intuition enabled it to play in such a counter-intuitive manner. AlphaGo’s power derived largely from its capacity to play the game in a way that no human ever would. It learned to play this way itself, and when we try to gaze inside its “brain” as it vanquishes the best human competitor available, we have no way of knowing what it’s “thinking.”

Report Courtesy Haaretz